Armand here, RPost’s product evangelist armadillo. Peering into my crystal ball, I see that TikTok has lured content creators into performing in every imaginable style, look, manner of speech, and movement, giving ByteDance (TikTok’s parent company) the data it needed to perfect the ability to generate AI clones.

And these AI clones have been perfected.

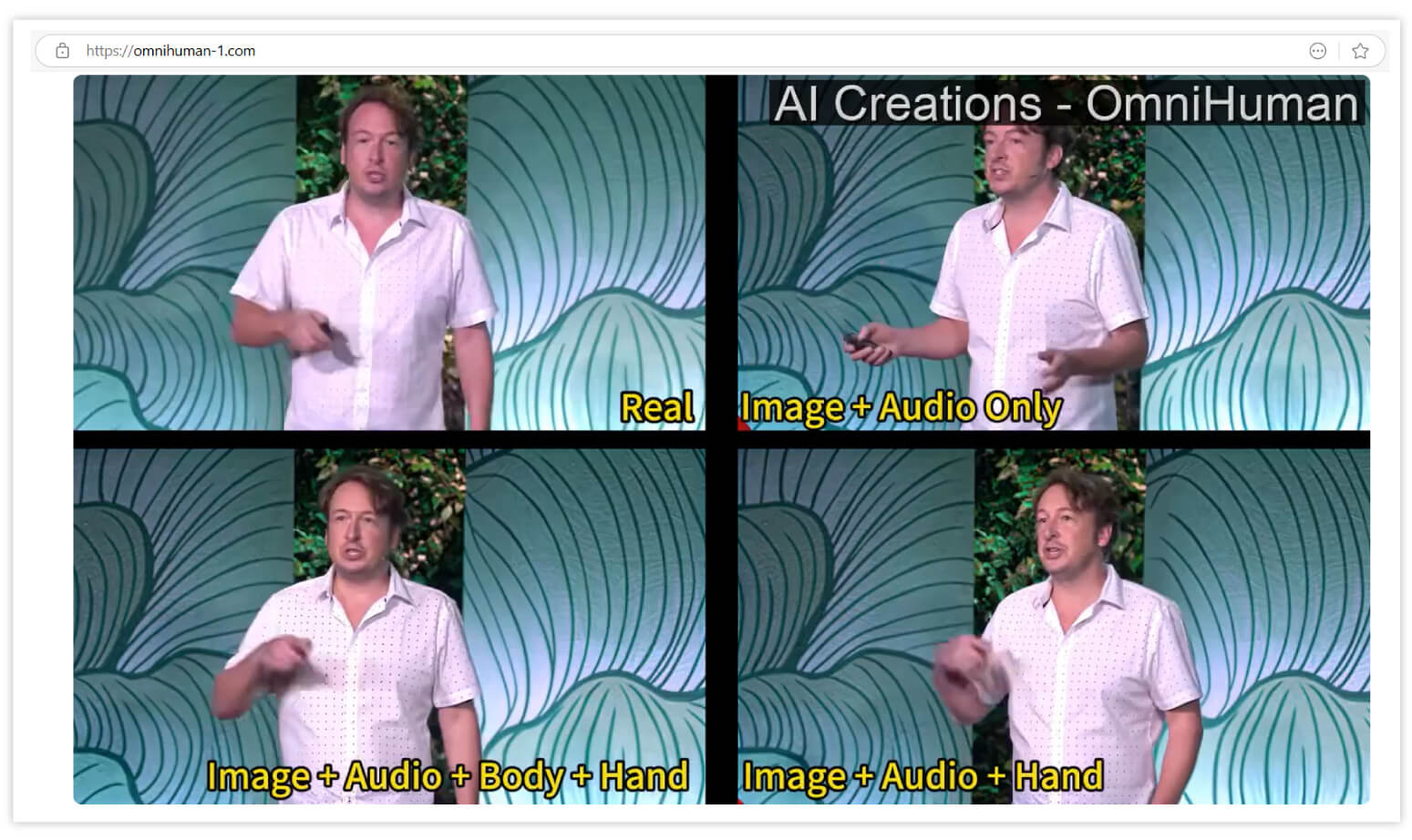

Why do I predict this? ByteDance just released a demonstration site that essentially shows that, from imperfect snippets of voice, movement, facial smirks, and hand gestures, it can generate an AI clone of a human. They call this “OmniHuman”.

According to ByteDance, “OmniHuman is an end-to-end AI framework developed by researchers at ByteDance. It can generate incredibly realistic human videos from just a single image and a motion signal—like audio or video. Whether it's a portrait, half-body shot, or full-body image, OmniHuman handles it all with lifelike movements, natural gestures, and stunning attention to detail…”

What does this mean? We are on an irreversible, ever more sophisticated path toward a world in which what you see may or may not be real. This is either an impersonator cybercriminal’s dreamland or their bust. Their bust – if it becomes common knowledge that no one can trust what they see, hear, or read.

Their dreamland – in that it will take many years before this awareness becomes widespread. After all, people are still being tricked into buying gift cards for cybercriminals by the most obvious email lures.

What to do? At the least, arm yourself with a means of identifying whether your message information has been siphoned up by cybercriminals, empowering them not only to create an AI clone of you or your colleague, but also to have that clone discuss contextually relevant information. Without the “context”, the AI clone may be discussing the weather in Tanzania when the other participants in the conversation are expecting a discussion about Philly cheesesteak sandwiches. Context is king for cybercriminals.

This is why you should deny them these details.

With RPost, you can ensure that your team can see the unseen – that it can identify when cybercriminals are attempting to access your information, even at a third party. Then, auto-lock the information to thwart the cybercriminal from seeing who is communicating with whom, about what, when, and where.

Less secure third parties can harbor so-called cybercriminal ‘sleeper cells’, where cybercriminals gather consequential information that helps them mount a more successful attack. This reconnaissance provides them with context about who is communicating with whom, about what, and when. In the wrong hands, this context empowers cybercriminals to boomerang back a hyper-contextual, hyper-targeted impersonation lure, resulting in losses for the data originator. These losses can include operational disruption leading to financial loss or worse (e.g., ransomware, data exfiltration, supplier payment fraud).

RPost’s Raptor™ AI model can identify these schemes in action and pre-empt them. We call this PRE-Crime™. Get in touch to learn more about this technology.

